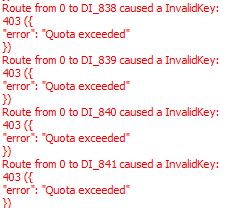

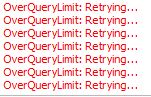

So I have been using the ORS Tools plugin with the Open Route Service API to calculate drive distance and time between two sets of geocoded addresses. It works when I use a very small subset of the data set, but my total data set is very large, over 18,800 rows. Whenever I try to run ORS Tools on the entire data set, or even on a subset with 1,000 records, the tool crashes. Is there a limit to how much data you can use per day with the Open Route Service API? Here are some of the error messages I get.

Hi,

I had to do a very similar task some weeks ago. If you read the documentation of the Open Route Service API, you will find that there is a limit on the number of requests you can send.

If you have a very large dataset, you should run Open Route Service on localhost, which you can do using Docker.

Here is a great tutorial in the documentation, with a link to a video from SyntaxByte.

Bye

Edit: after you deploy Open Route Service with Docker, you just have to add your new local server as a provider in ORS Tools.

Maybe it's because I am not the most tech-savvy person, but I have been looking around the documentation and I have not been able to find any information about how many routes you can generate per day. I am also not very familiar with Docker. If using the API in QGIS, what would you say is a good number of routes to try to generate in a 24-hour period?

https://openrouteservice.org/restrictions/

It’s quite well documented what the restrictions are. They’re not restricted by number, but by total length. So you could use the distance as the crow flies between all of your start- and endpoints as a guide, to divide up all your requests into reasonable chunks, if this is a one-off operation.
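As a rough sketch of that chunking idea: the snippet below sums great-circle (haversine) distances and starts a new chunk whenever a distance budget would be exceeded. The names `haversine_km`, `chunk_by_distance` and the `budget_km` value are made up for illustration; the real figure has to come from the restrictions page, and road distance will always be longer than the crow-fly estimate.

```python
import math

def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance in km between two lon/lat points (degrees)."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def chunk_by_distance(pairs, budget_km):
    """Group (start, end) pairs so each chunk's summed crow-fly distance
    stays within budget_km. budget_km is a hypothetical value, not an ORS constant.
    A single pair longer than the budget still gets a chunk of its own."""
    chunks, current, used = [], [], 0.0
    for start, end in pairs:
        d = haversine_km(*start, *end)
        if current and used + d > budget_km:
            chunks.append(current)
            current, used = [], 0.0
        current.append((start, end))
        used += d
    if current:
        chunks.append(current)
    return chunks

# Each pair is ((lon, lat), (lon, lat)); here five identical one-degree hops.
pairs = [((0.0, 0.0), (0.0, 1.0))] * 5
chunks = chunk_by_distance(pairs, budget_km=250)
```

Each chunk can then be exported as its own layer or CSV and run separately.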

But if you have to process so many requests on a regular basis, a local install would be best…

Hey,

as @mattiascalas correctly linked, all API keys are bound to one of our plans, each of which has a per-minute and a daily limit on the number of times you can query an endpoint.

Given the distance limit, please also note that while shortest paths are built from shortest paths (meaning if you found the shortest path from A to B, and it goes via C, then you have also found the shortest A-C and C-B path), the reverse is not true (meaning the concatenation of the shortest route from X to Y and the shortest route from Y to Z will usually not yield the shortest route from X to Z).
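To make that concrete, here is a toy example with a plain Dijkstra search on a made-up three-node graph (the node names and weights are invented, and this is not how openrouteservice computes routes internally). Splitting X to Z at Y and adding up the two legs gives a longer result than routing X to Z directly.

```python
import heapq

def shortest(graph, src, dst):
    """Length of the shortest path from src to dst (Dijkstra, non-negative weights)."""
    dist = {src: 0.0}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            return d
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, w in graph.get(node, {}).items():
            nd = d + w
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                heapq.heappush(heap, (nd, nxt))
    return float("inf")

# Hypothetical toy network: a direct X-Z edge that bypasses Y.
graph = {
    "X": {"Y": 1.0, "Z": 1.5},
    "Y": {"X": 1.0, "Z": 1.0},
    "Z": {"X": 1.5, "Y": 1.0},
}

via_y = shortest(graph, "X", "Y") + shortest(graph, "Y", "Z")  # concatenated legs
direct = shortest(graph, "X", "Z")                             # true shortest path
```

So cutting a long route at an intermediate point to stay under the distance limit can overestimate the real distance and time.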

20,000 requests is quite a lot, but it would still only take 10 days with a suitable script. If this is a one-off analysis, it’s probably not worth setting up a custom instance when running on a larger (read: Europe or bigger) dataset, since these tend to take quite a lot of RAM.

Depending on the type of analysis, you might be eligible for our collaborative plan, which increases the daily limit to 10,000 and would make this a two-day analysis. Check the aforementioned plans for the details.
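The arithmetic behind those estimates, plus a minimal way to split the rows into per-day batches, could look like the sketch below. The 2,000 per day figure is only inferred from "20,000 requests in 10 days" and is an assumption here, so check your plan for the actual value; `days_needed` and `daily_batches` are illustrative helper names, not part of ORS Tools.

```python
import math

def days_needed(total_requests, daily_limit):
    """Number of days required if at most daily_limit requests run per day."""
    return math.ceil(total_requests / daily_limit)

def daily_batches(rows, daily_limit):
    """Yield consecutive slices of rows, one slice per day."""
    for i in range(0, len(rows), daily_limit):
        yield rows[i:i + daily_limit]

ASSUMED_DAILY_LIMIT = 2000   # assumption, inferred from the 10-day estimate
COLLABORATIVE_LIMIT = 10000  # daily limit mentioned for the collaborative plan

rows = list(range(18800))    # stand-in for the 18,800 address pairs
batches = list(daily_batches(rows, ASSUMED_DAILY_LIMIT))

print(days_needed(20000, ASSUMED_DAILY_LIMIT))       # 10
print(days_needed(20000, COLLABORATIVE_LIMIT))       # 2
print(len(batches), len(batches[-1]))                # 10 800
```

Each batch can be saved as a separate file and run through ORS Tools on a different day, staying within the per-minute limit as well.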

Best regards